Euro News

Objections were sparked when politicians in the city of Porto Alegre discovered the law they had enacted was written by OpenAI’s popular chatbot.

City lawmakers in Brazil have enacted what appears to be the nation’s first legislation written entirely by artificial intelligence (AI) – even if they didn’t know it at the time.

The experimental ordinance was passed in October in the southern city of Porto Alegre. City councilman Ramiro Rosário revealed this week that it was written by a chatbot, sparking objections and raising questions about the role of AI in public policy.

Rosário told The Associated Press that he asked OpenAI’s chatbot ChatGPT to craft a proposal to prevent the city from charging taxpayers to replace water consumption meters if they are stolen.

He then presented it to his 35 peers on the council without making a single change or even letting them know about its unprecedented origin.

“If I had revealed it before, the proposal certainly wouldn’t even have been taken to a vote,” Rosário told the AP.

The 36-member council approved it unanimously and the ordinance went into effect on November 23.

“It would be unfair to the population to run the risk of the project not being approved simply because it was written by artificial intelligence,” he added.

Setting a ‘dangerous precedent’

The arrival of ChatGPT on the marketplace just a year ago has sparked a global debate on the impacts of potentially revolutionary AI-powered chatbots.

While some see the technology as a promising tool, it has also raised concerns and anxiety about the unintended or undesired effects of a machine handling tasks currently performed by humans.

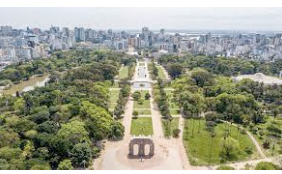

Porto Alegre, with a population of 1.3 million, is the second-largest city in Brazil’s south.

The city’s council president, Hamilton Sossmeier, found out that Rosário had enlisted ChatGPT to write the proposal when the councilman bragged about the achievement on social media on Wednesday.

Sossmeier initially told local media he thought it was a “dangerous precedent”.

The AI large language models that power chatbots like ChatGPT work by repeatedly trying to guess the next word in a sentence and are prone to making up false information, a phenomenon sometimes called hallucination.

All chatbots sometimes introduce false information when summarizing a document, at rates ranging from about 3 per cent for the most advanced GPT model to about 27 per cent for one of Google’s models, according to recently published research by the tech company Vectara.

In an article published on the website of Harvard Law School’s Center on the Legal Profession earlier this year, Andrew Perlman, dean of Suffolk University Law School, wrote that ChatGPT “may portend an even more momentous shift than the advent of the Internet,” but also warned of its potential shortcomings.

“It may not always be able to account for the nuances and complexities of the law.

“Because ChatGPT is a machine learning system, it may not have the same level of understanding and judgment as a human lawyer when it comes to interpreting legal principles and precedent. This could lead to problems in situations where a more in-depth legal analysis is required,” Perlman wrote.