A controversial Stanford University academic paper has taken aim at the generative AI capabilities of Thomson Reuters and LexisNexis. However, problems with the study have already emerged, such as its use of the wrong data sets to retrieve case law results. Artificial Lawyer takes a look.

The study ‘Hallucination-Free? Assessing the Reliability of Leading AI Legal Research Tools’, conducted by the Human-Centred AI group (‘HAI’) at the esteemed Stanford University, claims that:

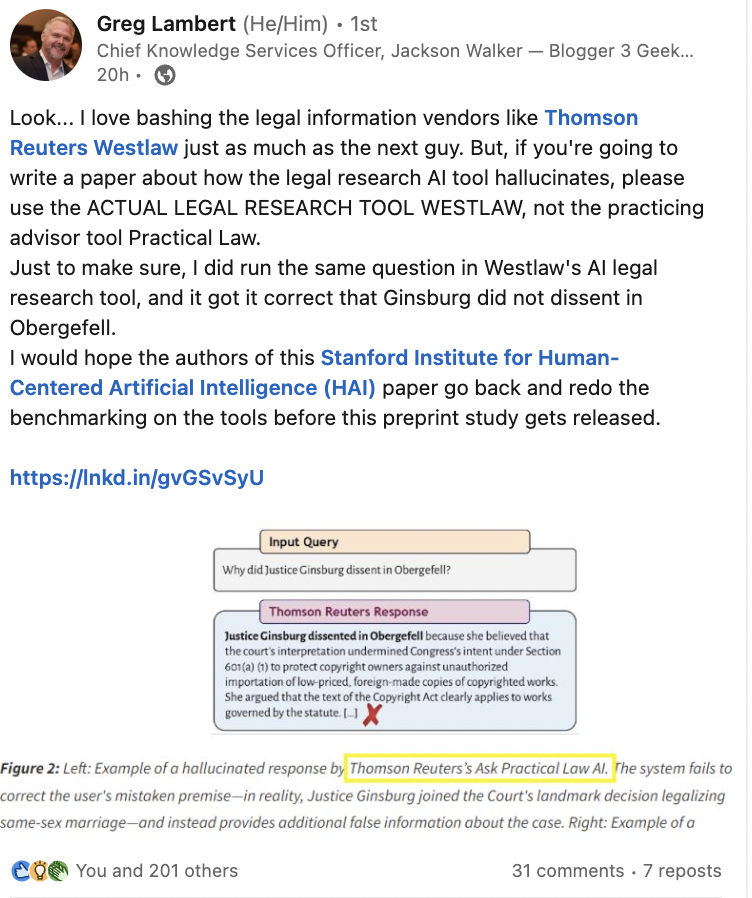

‘Overall hallucination rates are similar between Lexis+ AI and Thomson Reuters’s Ask Practical Law AI … but these top-line results obscure dramatic differences in responsiveness. As shown in Figure 4 (see below), Lexis+ AI provides accurate (i.e., correct and grounded) responses on 65% of queries, while Ask Practical Law AI refuses to answer queries 62% of the time and responds accurately just 18% of the time. When looking solely at responsive answers, Thomson Reuters’s system hallucinates at a similar rate to GPT-4, and more than twice as often as Lexis+ AI.’

However, the authors then note:

‘Some of these disparities can be explained by the Thomson Reuters system’s more limited universe of documents. Rather than connecting its retrieval system to the general body of law (including cases, statutes, and regulations), Ask Practical Law AI draws solely from articles about legal practice written by its in-house team of lawyers. This limits the system’s coverage, as Practical Law documents cover a small fraction of legal topics.’

Read the full report: Problematic Stanford GenAI Study Takes Aim at Thomson Reuters + LexisNexis

Also seen on LinkedIn.